Unpacking the Transformer: The AI Breakthrough That Changed Everything

by Pradyuman

Alright, so I’ve had this paper, “Attention is All You Need” sitting in my reading list for a solid six months now. Finally decided to check it out today. It’s not super hard to understand, but I did have to ask ChatGPT for a little help. Funny enough, ChatGPT is actually built on the transformer architecture the paper talks about. Pretty cool, right?

So, here’s the deal: this paper introduces the Transformer model, and it totally flips the script on how we process data. Instead of relying on traditional recurrence like older models, it goes all-in on self-attention. This is a game-changer because self-attention means the model can process input sequences in parallel. Translation? It’s faster, it scales way better, and it just outperforms the older models in pretty much every way.

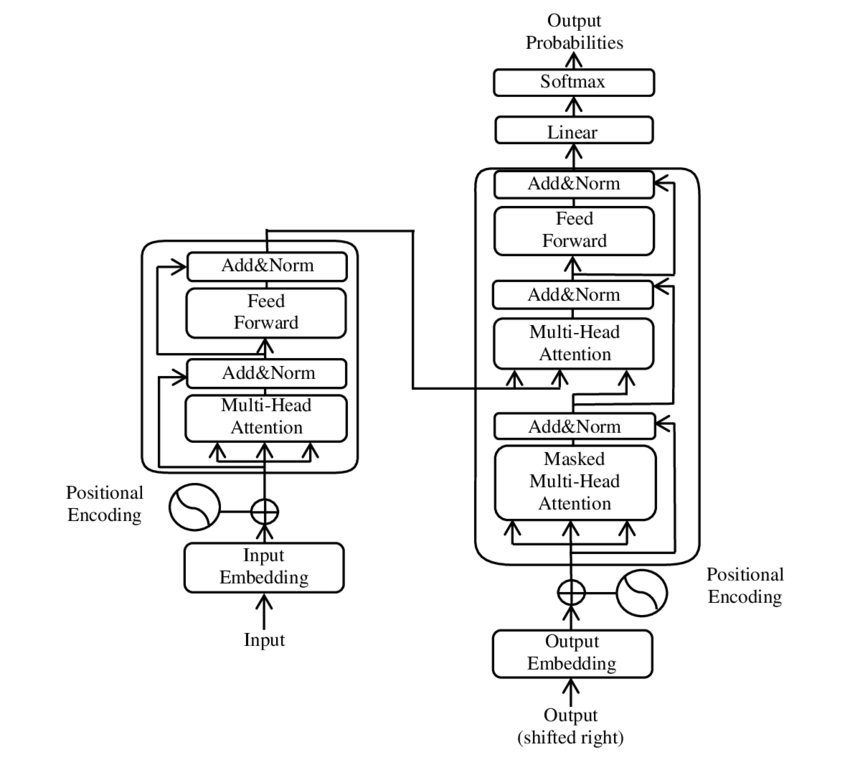

Now let’s talk about the Transformer’s core setup. It’s based on an encoder-decoder architecture. The encoder handles the input sequence, while the decoder generates the output based on the encoder’s work and its own past outputs. But the real magic is in the Multi-Head Attention Mechanism - this is what lets the model focus on different parts of the input data at the same time. So, rather than just processing one piece at a time like traditional recurrent models, the Transformer can look at everything all at once, making it lightning-fast. And here’s the real mic-drop moment: the introduction of attention completely changes the game. With the multi-headed self-attention mechanism, the Transformer model doesn’t just speed up training - it takes the whole performance bar and smashes it. We’re talking state-of-the-art results on tasks like the WMT 2014 English-to-German and English-to-French translation benchmarks. On the English-to-German task, it hit a BLEU score of 28.4, blowing the previous record out of the water. That’s not just progress - it’s a huge leap forward. —

So yeah, this paper isn’t just a technical blueprint - it’s the foundation of some of the most powerful AI systems we’re using today. And next time you’re chatting with ChatGPT or using an AI model, just know: you’re interacting with the magic of the Transformer. Pretty wild, huh? Catch you in the next one - where we break down more game-changing tech!

tags: ai - gen-ai